On Wednesday, MLCommons, a prominent artificial intelligence benchmarking group, announced a fresh suite of tests and outcomes aimed at evaluating the speed of cutting-edge hardware in running AI applications and promptly responding to user queries.

The latest benchmarks introduced by MLCommons focus on assessing the swiftness with which AI chips and systems can generate responses from robust AI models inundated with data.

These results offer insights into how rapidly AI applications like ChatGPT can respond to user inquiries.

Among the newly added benchmarks is Llama 2, which measures the efficiency of question-and-answer scenarios for large language models boasting 70 billion parameters. This benchmark, developed by Meta Platforms, enhances MLCommons’ repertoire of testing tools.

MLCommons incorporated a second text-to-image generator into its benchmarking suite, known as MLPerf, which is based on Stability AI’s Stable Diffusion XL model.

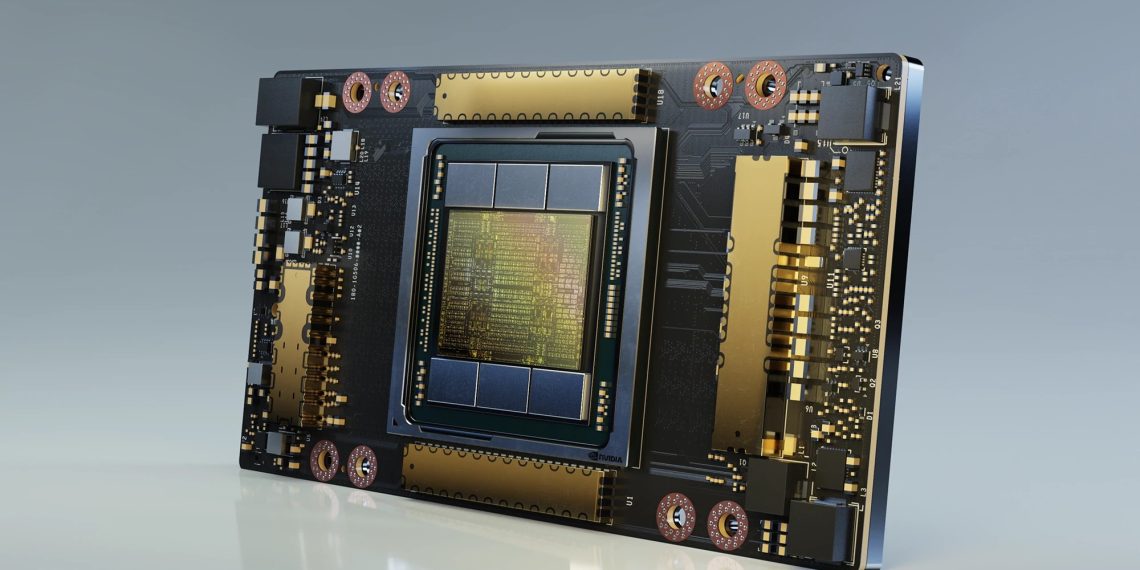

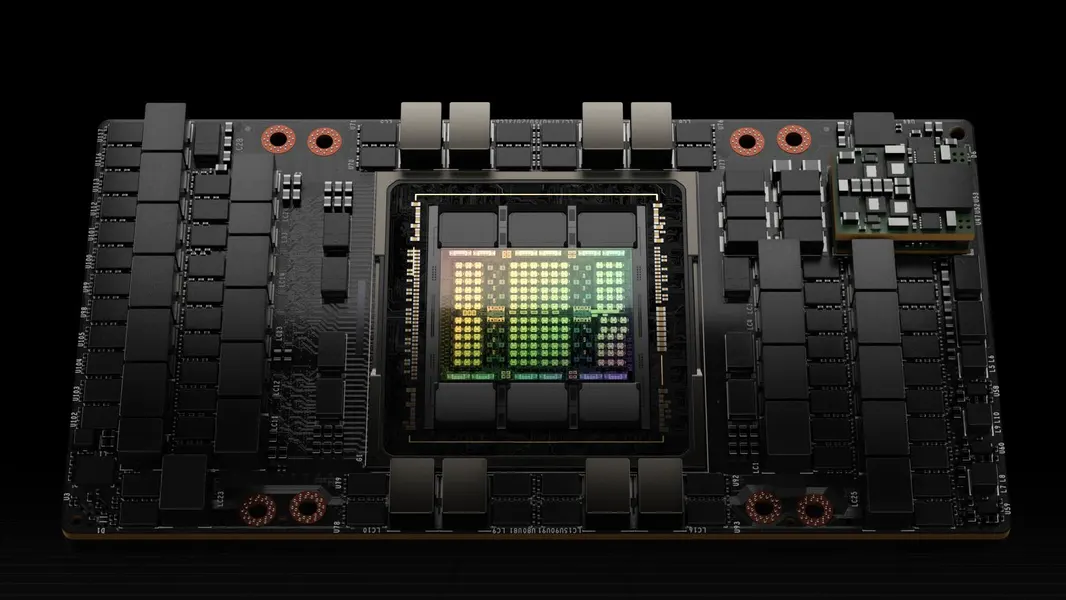

Servers featuring Nvidia‘s H100 chips, crafted by industry leaders like Alphabet‘s Google, Supermicro, and Nvidia itself, emerged as frontrunners in both newly introduced benchmarks, showcasing superior raw performance.

Additionally, server manufacturers submitted designs featuring Nvidia’s L40S chip, albeit less powerful compared to the H100.

Krai, a server builder, submitted a design for the image generation benchmark featuring a Qualcomm AI chip known for its lower power consumption relative to Nvidia’s advanced processors. Intel also contributed a design based on its Gaudi2 accelerator chips, delivering commendable performance.

While raw performance is important, energy efficiency is also a significant factor in deploying AI applications.

MLCommons recognizes this by offering a separate benchmark category dedicated to measuring power consumption, addressing the imperative need for optimal performance with minimal energy usage in AI hardware deployment.