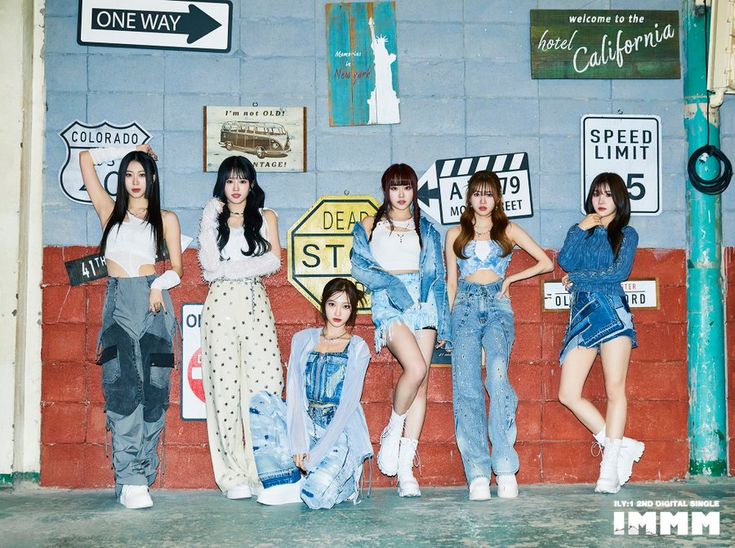

FCENM is stepping up to combat a troubling trend affecting its artists. The label has announced plans to take legal action against deepfake videos involving ILY:1. These AI-generated videos pose a significant threat to the group’s reputation and well-being.

On August 31, FCENM addressed the issue publicly, condemning the distribution of these synthetic videos. The label emphasized that such actions not only defame the artists but also constitute serious crimes. They stated, “AI-based synthetic videos targeting our artists are being distributed online. These acts seriously damage the artist’s reputation, and we are taking this very seriously.”

The label has committed to pursuing strong legal responses. They are gathering evidence and working closely with their legal team to address the issue decisively. FCENM aims to protect its artists’ rights and honour, making it clear that it will not tolerate any illegal activities that infringe upon these rights.

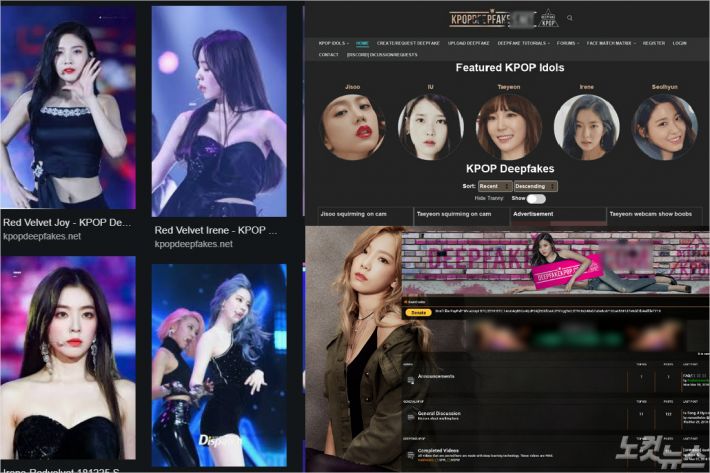

The rise of deepfake technology presents a growing challenge, particularly in the entertainment industry. Deepfake videos, which are digitally manipulated to create misleading or false representations, have become a significant concern. These videos can cause deep emotional trauma and are considered a form of harassment. The problem is exacerbated for female celebrities, who are disproportionately targeted by these unethical practices.

A report from 2019 revealed that 96 per cent of deepfake videos were obscene, with a significant portion featuring Korean pop stars. This troubling trend highlights the vulnerability of female K-pop idols, with several ranking among the top targets for deepfake content.

The recent controversy surrounding leaked private photos of MOMOLAND’s Nancy, along with other “spycam” incidents, has brought the problem into sharper focus. In response, South Korean netizens have rallied for stricter punishments for those who create and disseminate deepfake content. The hashtag #Deepfake_StrictPunishment has gained traction, and a petition calling for harsher penalties has garnered over 200,000 signatures.

The urgent need for government action to address deepfake culture and protect victims has never been clearer. FCENM’s proactive stance against these videos is an important step in safeguarding artists’ reputations and ensuring their well-being.

Understanding Deepfakes in K-pop

Deepfakes use artificial intelligence to create manipulated videos that misrepresent individuals. In K-pop, this technology has been used to produce harmful and misleading content featuring idols, often in obscene contexts. The impact on K-pop stars is profound: the videos cause emotional distress and violate their privacy.

The ethical and moral implications of deepfakes are severe, as they distort reality and exploit the identities of celebrities. The rise of such content has prompted increased calls for legal action and stricter regulations to protect the victims. Companies like FCENM are leading efforts to combat this issue, but broader government intervention is needed to effectively address the problem and provide support for affected individuals.